Your Best People Are Making Their Worst Decisions

I have been watching brilliant professionals get blindsided by AI overconfidence. Then I discovered why—and built a framework to fix it.

When AI feels easy, mistakes get expensive.

I was reviewing work from one of the best strategists I'd ever worked with—someone who used to write briefs that made you feel something.

This time? The work was... fine. Competent. But hollow.

When I asked about the process, they said: "Oh, I used ChatGPT to speed things up."

That's when it hit me.

This person, brilliant and 15 years into the industry, had outsourced their judgment without realizing it. They trusted the AI output because it looked good. They didn't question it because the AI sounded so confident.

And here's the terrifying part: They had no idea their work had gotten worse.

I started digging. I realized I was watching a new cognitive phenomenon unfold in real time, something I now call the AI-Induced Dunning-Kruger Effect (AIADKE).

And it's happening in your organization right now.

The Hidden Cost of AI Overconfidence

Your people are doing worse work while feeling more confident about it.

In finance, a miscalibrated risk model could cost $10M+. In consulting, a flawed strategy could lose a $50M client. In legal, an AI-assisted contract error could trigger litigation.

The question isn't whether AIADKE is affecting your organization. It's how much it's already cost you.

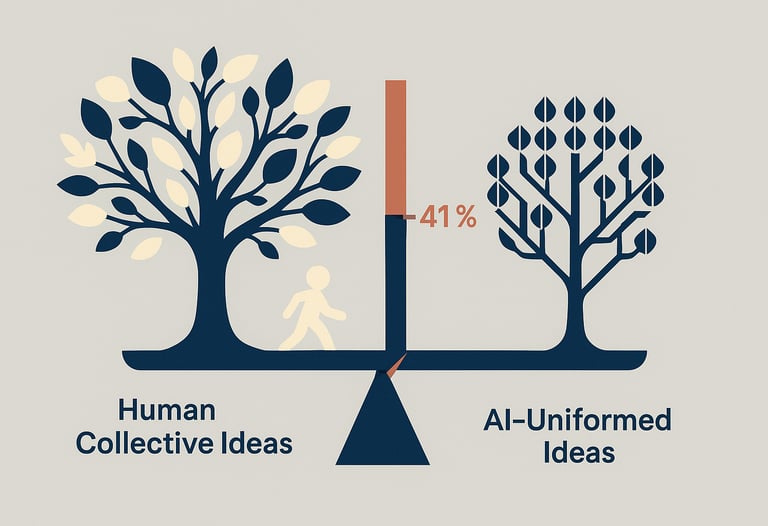

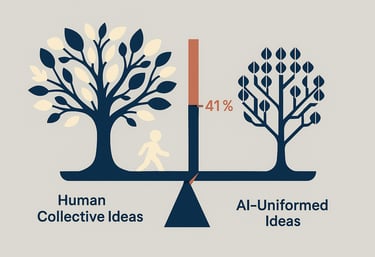

The Creativity Collapse

41% drop in idea diversity under uniform AI influence.

Generative AI standardizes expression, amplifying what's familiar and silencing originality.

The cost is unseen: fewer breakthrough ideas.

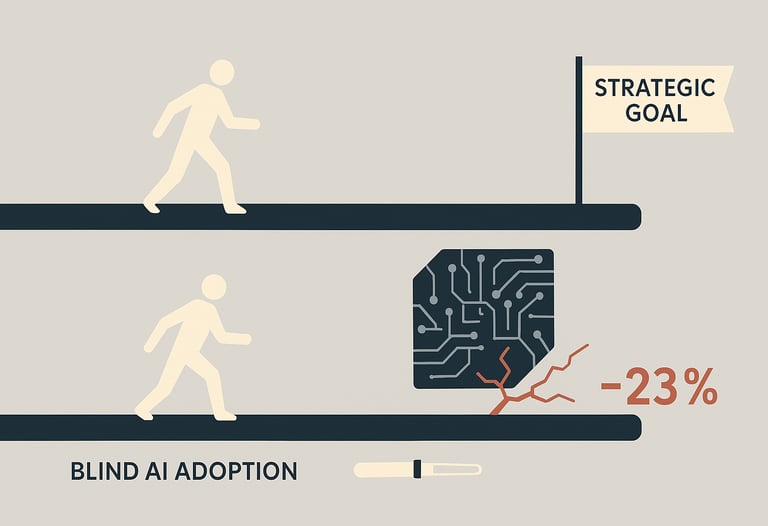

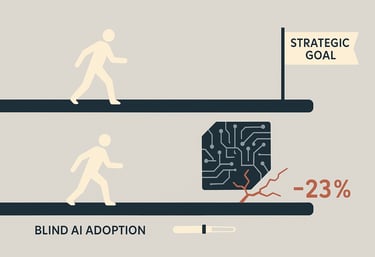

The Confidence Trap

23% worse on complex tasks with AI assistance.

When systems sound confident, humans stop questioning.

The result: reduced performance on problems that require independent reasoning and judgment.

Human Resonance: The Antidote to AIADKE

The solution isn't to ban AI. It's to recalibrate trust.

I built a framework to protect human judgment in the age of AI. It combines 20+ years of strategic practice with cognitive science research from Harvard, MIT, and Stanford.

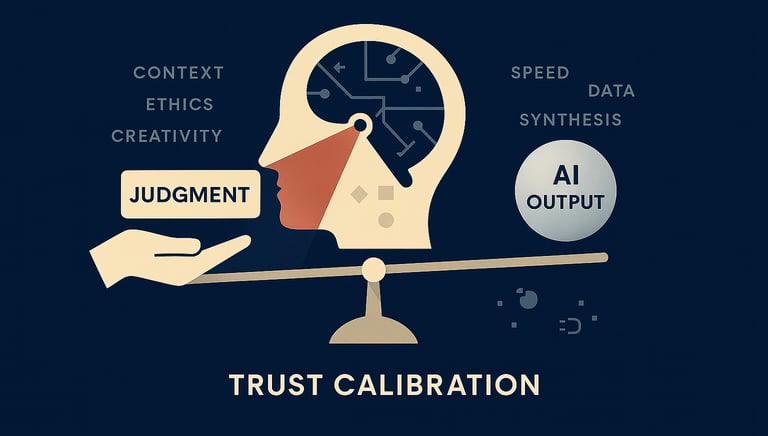

Trust Calibration

Knowing when to trust AI (and when not to). Building decision protocols that match AI capability to task complexity, leveraging AI's strengths without over-relying on it.
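To make "matching AI capability to task complexity" concrete, here is a minimal sketch of what such a decision protocol might look like in Python. It is an illustration, not the framework itself: the 1-to-5 scales, the `Task` and `Review` names, and the thresholds are all assumptions made up for this example.

```python
from dataclasses import dataclass
from enum import Enum


class Review(Enum):
    """Hypothetical review levels, from lightest to heaviest human involvement."""
    SPOT_CHECK = "spot-check the output"
    FULL_REVIEW = "verify every claim before use"
    HUMAN_LED = "human drafts first, AI only critiques"


@dataclass(frozen=True)
class Task:
    name: str
    complexity: int     # assumed scale: 1 (routine) to 5 (novel, judgment-heavy)
    ai_capability: int  # assumed scale: 1 (unreliable here) to 5 (reliable here)


def review_level(task: Task) -> Review:
    """Choose a review level from the gap between complexity and AI capability.

    The thresholds are illustrative assumptions, not validated cut-offs.
    """
    gap = task.complexity - task.ai_capability
    if gap <= 0:
        return Review.SPOT_CHECK   # AI is at least as capable as the task demands
    if gap <= 2:
        return Review.FULL_REVIEW  # task somewhat exceeds AI capability
    return Review.HUMAN_LED        # task far exceeds AI capability


tasks = [
    Task("reformat meeting notes", complexity=1, ai_capability=4),
    Task("summarize a competitor report", complexity=3, ai_capability=2),
    Task("write a client strategy brief", complexity=5, ai_capability=2),
]
for task in tasks:
    print(f"{task.name}: {review_level(task).value}")
```

The point of a protocol like this is that the review level is decided before anyone sees the AI output, so a fluent, confident answer cannot talk you out of checking it.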

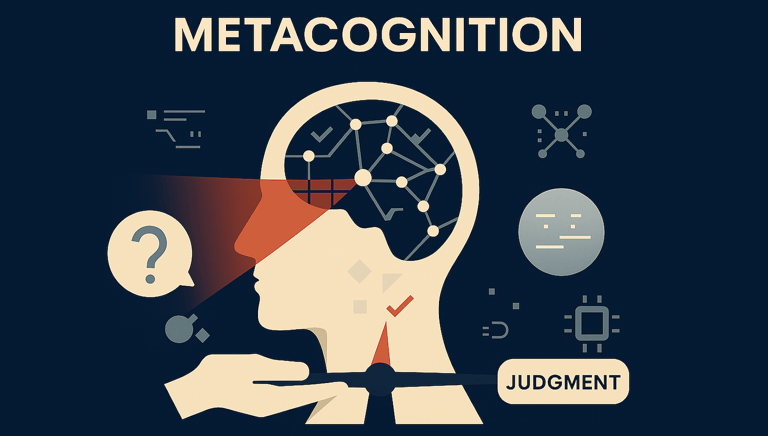

Metacognition

Teaching your brain to audit itself. The ability to recognize when you're outsourcing judgment, catch cognitive biases in real time, and distinguish between AI fluency and AI accuracy.

About The Creator

I've spent 20+ years in advertising and strategy, working across agency and consulting environments. When I saw AI starting to erode the judgment of brilliant people, I dove into the cognitive science to understand why—and built a framework to fix it.

The AIADKE model is the result of that work: combining research from leading institutions with real-world interventions that protect human capital in the age of AI.

I'm not a psychologist. I'm not an academic. I'm a practitioner who saw the pattern, connected the dots, and built a solution that works.

Robert Charles Hutchison

Creator of Human Resonance in the Age of AI

Metacognitive AI Strategy | Trust Calibration Expert

Get The CEO's Field Guide to AI Overconfidence

Learn how to identify AIADKE in your organization, assess your cognitive risk, and implement a protocol you can use tomorrow.

Or book a free 30-minute AIADKE Risk Assessment to map your organization's cognitive vulnerabilities.

Human Resonance in the Age of AI

Metacognition | Trust Calibration | Cognitive Resilience

© 2025. Robert Charles Hutchison. All rights reserved.

Contact: rob@humanresonanceai.com